This article walks through the installation and configuration of the Puppet Enterprise server. We are going to install the Puppet server in monolithic mode. In a monolithic installation, the Puppet Master, the Puppet console, and PuppetDB are all installed on one node. This is the simplest way to evaluate Puppet Enterprise, and it lets you manage up to 500 Puppet agent nodes. To know more about monolithic vs. split installation, please refer to this article.

Prerequisites:

- Working DNS setup, or matching entries in /etc/hosts

- Puppet Enterprise Package

- Red Hat Enterprise Linux 7
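Before starting, it can help to confirm the name-resolution prerequisite. The following is a minimal sketch, not part of the Puppet tooling: `host_in_file` is a hypothetical helper that checks whether a host name or alias is listed in a hosts-format file (by default /etc/hosts), ignoring comments.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Puppet Enterprise).
# Returns 0 if NAME is listed as a host name or alias in the
# hosts-format FILE, and non-zero otherwise.
host_in_file() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        { sub(/#.*/, "") }    # drop full-line and trailing comments
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }
    ' "$file"
}
```

For example, `host_in_file UA-HA || getent hosts UA-HA` first looks in /etc/hosts and then falls back to the system resolver, which also covers DNS.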

Install and Configure Puppet Enterprise (Puppet Master):

1. Log in to the RHEL 7 system.

    [root@UA-HA ~]# cat /etc/redhat-release
    Red Hat Enterprise Linux Server release 7.2 (Maipo)
    [root@UA-HA ~]# uname -a
    Linux UA-HA 3.10.0-327.el7.x86_64 #1 SMP Thu Oct 29 17:29:29 EDT 2015 x86_64 x86_64 x86_64 GNU/Linux
    [root@UA-HA ~]#

2. Copy the Puppet Enterprise package to /var/tmp.

    [root@UA-HA ~]# cd /var/tmp
    [root@UA-HA tmp]# ls -lrt puppet-enterprise-2015.3.1-el-7-x86_64.tar.gz
    -rw-r--r-- 1 root root 336274958 Jan 22 14:33 puppet-enterprise-2015.3.1-el-7-x86_64.tar.gz
    [root@UA-HA tmp]#

3. Uncompress and extract the package.

    [root@UA-HA tmp]# gunzip puppet-enterprise-2015.3.1-el-7-x86_64.tar.gz
    [root@UA-HA tmp]# tar -xf puppet-enterprise-2015.3.1-el-7-x86_64.tar
    [root@UA-HA tmp]# ls -lrt
    -rw-r--r-- 1 root root 338452480 Jan 22 14:33 puppet-enterprise-2015.3.1-el-7-x86_64.tar
    drwxr-xr-x 8 root root 4096 Jan 22 14:43 puppet-enterprise-2015.3.1-el-7-x86_64
    [root@UA-HA tmp]#
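The gunzip and tar commands above can also be combined into a single step. Here is a small sketch of that alternative: `extract_tarball` is a hypothetical wrapper around `tar -xzf`, which decompresses and extracts in one pass. Unlike gunzip, it leaves the original .tar.gz file in place.

```shell
#!/bin/sh
# Hypothetical wrapper: extract a .tar.gz archive into DEST (default: the
# current directory) in one step, keeping the original archive untouched.
extract_tarball() {
    archive=$1
    dest=${2:-.}
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}
```

For the package used here, that would be `extract_tarball puppet-enterprise-2015.3.1-el-7-x86_64.tar.gz /var/tmp`.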

4. Navigate to the Puppet Enterprise directory and list the contents.

    [root@UA-HA puppet-enterprise-2015.3.1-el-7-x86_64]# ls -lrt
    total 408
    -rw-r--r-- 1 root root 1225 Dec 23 16:03 README.markdown
    -rw-r--r-- 1 root root 19151 Dec 23 16:03 LICENSE.txt
    -rw-r--r-- 1 root root 10189 Dec 23 16:03 environments.rake
    -rw-r--r-- 1 root root 10394 Dec 23 16:03 db_import_export.rake
    -rwxr-xr-x 1 root root 110726 Dec 28 12:46 utilities
    -rw-r--r-- 1 root root 3221 Dec 28 12:46 update-superuser-password.rb
    -rwxr-xr-x 1 root root 26275 Dec 28 12:46 puppet-enterprise-uninstaller
    -rwxr-xr-x 1 root root 20598 Dec 28 12:46 puppet-enterprise-support
    -rwxr-xr-x 1 root root 134721 Dec 28 12:46 puppet-enterprise-installer
    -rw-r--r-- 1 root root 10235 Dec 28 12:46 pe-code-migration.rb
    -rw-r--r-- 1 root root 25595 Dec 28 12:46 pe-classification.rb
    -rw-r--r-- 1 root root 9 Dec 28 12:49 VERSION
    -rw-r--r-- 1 root root 206 Dec 28 12:49 supported_platforms
    drwxr-xr-x 3 root root 64 Jan 22 14:43 packages
    drwxr-xr-x 2 root root 26 Jan 22 14:43 noask
    drwxr-xr-x 2 root root 4096 Jan 22 14:43 modules
    drwxr-xr-x 2 root root 31 Jan 22 14:43 gpg
    drwxr-xr-x 2 root root 4096 Jan 22 14:43 erb
    drwxr-xr-x 2 root root 4096 Jan 22 14:43 answers
    [root@UA-HA puppet-enterprise-2015.3.1-el-7-x86_64]#

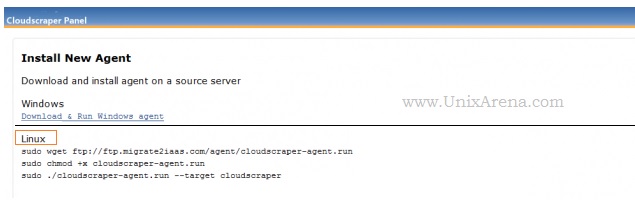

5. Execute the Puppet Enterprise installer and select the guided installation.

    [root@UA-HA puppet-enterprise-2015.3.1-el-7-x86_64]# ./puppet-enterprise-installer
    ==================================================================================
    Puppet Enterprise v2015.3.1 installer
    Puppet Enterprise documentation can be found at http://docs.puppetlabs.com/pe/2015.3/
    -----------------------------------------------------------------------------------
    STEP 1: GUIDED INSTALLATION
    Before you begin, choose an installation method. We've provided a few paths to choose from.
    - Perform a guided installation using the web-based interface. Think of this as an installation interview in which we ask you exactly how you want to install PE. In order to use the web-based installer, you must be able to access this machine on port 3000 and provide the SSH credentials of a user with root access. This method will login to servers on your behalf, install Puppet Enterprise and get you up and running fairly quickly.
    - Use the web-based interface to create an answer file so that you can log in to the servers yourself and perform the installation locally.
    If you choose not to use the web-based interface, you can write your own answer file, or use the answer file(s) provided in the PE installation tarball. Refer to Answer File Installation (http://docs.puppetlabs.com/pe/2015.3/install_automated.html), which provides an overview on installing PE with an answer file.
    ?? Install packages and perform a guided install? [Y/n] y
    Installing setup packages.
    Please go to https://UA-HA:3000 in your browser to continue installation. Be sure to use https:// and that port 3000 is reachable through the firewall.

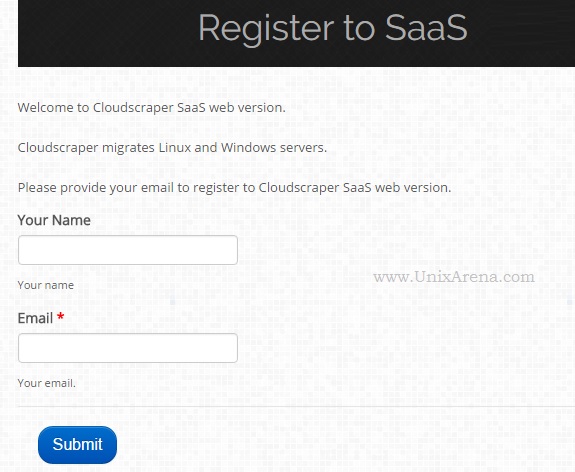

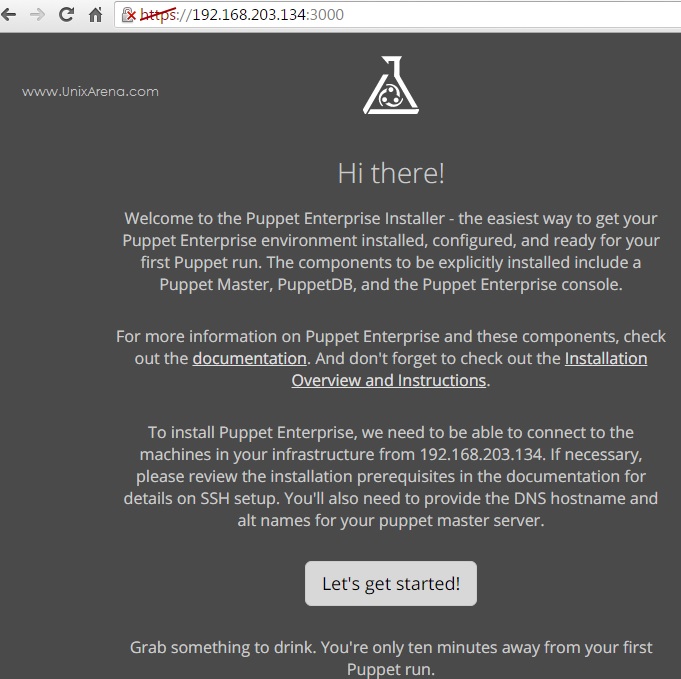

6. Open a browser and enter the URL provided in the previous command output (https://UA-HA:3000).

If you do not have DNS configured, use the IP address of the server instead of the host name.
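The installer output asks that port 3000 be reachable through the firewall. The sketch below assumes the host runs firewalld (the RHEL 7 default), which the original setup does not confirm. Both function names are hypothetical: `installer_url` builds the installer URL from a host name or IP address, and `print_firewall_commands` only prints the firewalld commands that would open the port, so you can review them before running them as root.

```shell
#!/bin/sh
# Hypothetical helper: build the web installer URL for a host name or IP.
installer_url() {
    printf 'https://%s:3000\n' "$1"
}

# Hypothetical helper: print (do not run) the firewalld commands that open
# TCP port 3000. Assumption: firewalld is the active firewall on this host.
print_firewall_commands() {
    printf '%s\n' \
        'firewall-cmd --permanent --add-port=3000/tcp' \
        'firewall-cmd --reload'
}
```

For example, `installer_url 192.0.2.10` prints the URL to open when only an IP address is available (192.0.2.10 is a placeholder address, not one from this setup).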

On the installer welcome page, click “Let’s get started”.

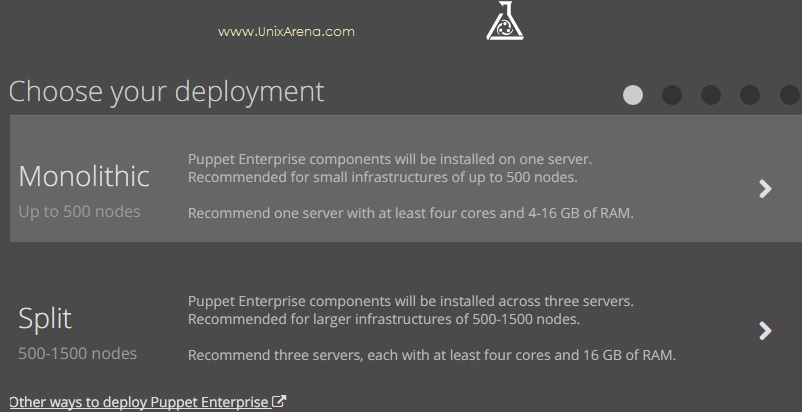

7. Choose monolithic installation.

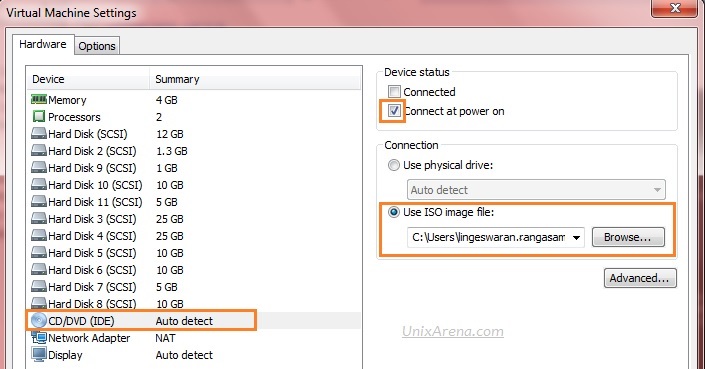

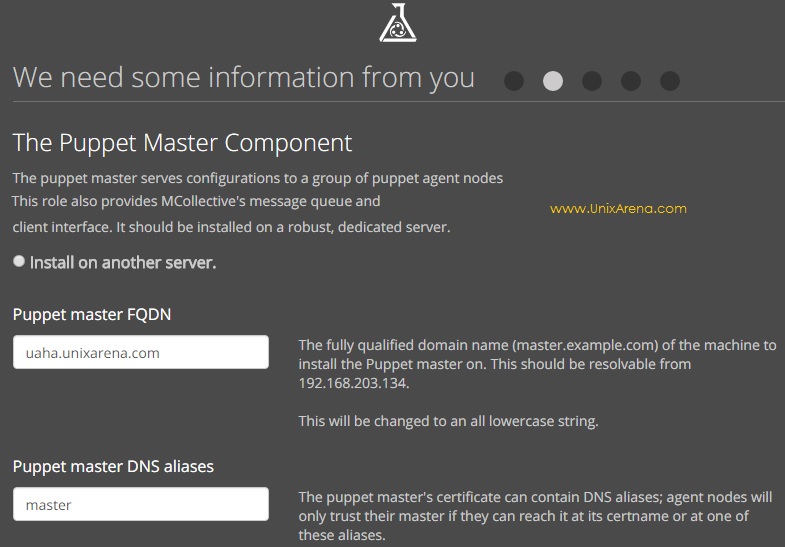

8. Enter the FQDN and host alias.

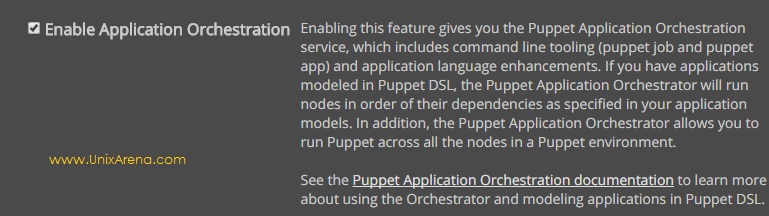

9. Enable application orchestration.

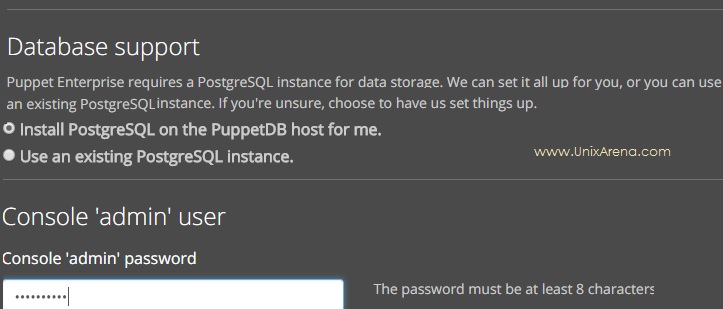

10. Puppet Enterprise requires a PostgreSQL instance for data storage. Choose to install PostgreSQL on the PuppetDB node. Here you also have the option to set the “admin” user password.

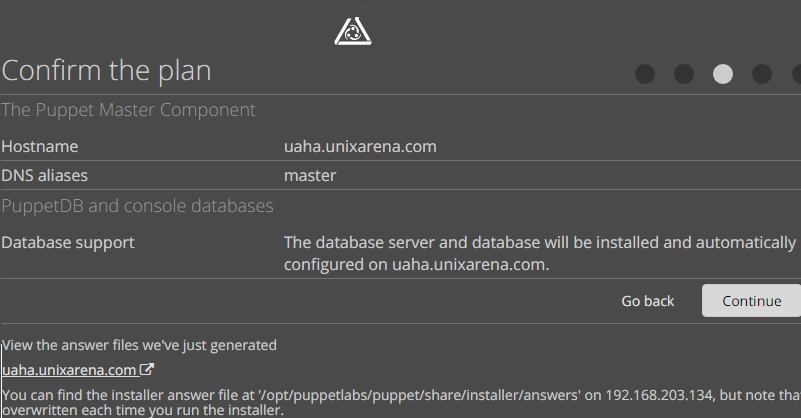

11. Confirm the puppet deployment plan.

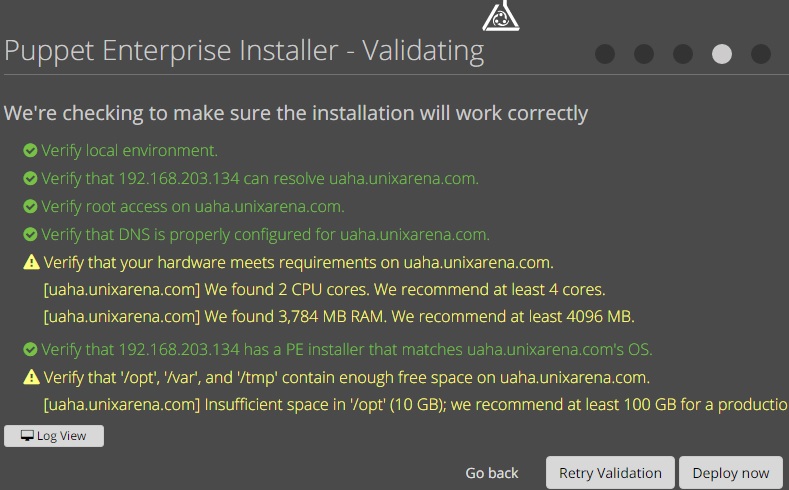

12. The Puppet installer validates the prerequisites. Since this setup is for testing purposes, you can safely ignore the warning. Click “Deploy Now”.

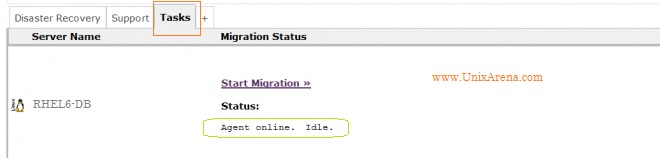

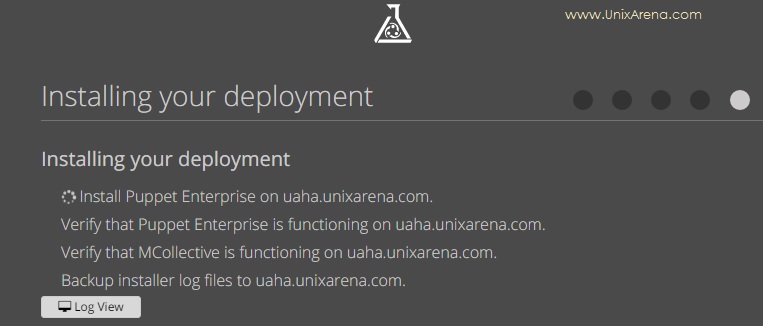

13. You can see that Puppet Enterprise is being deployed.

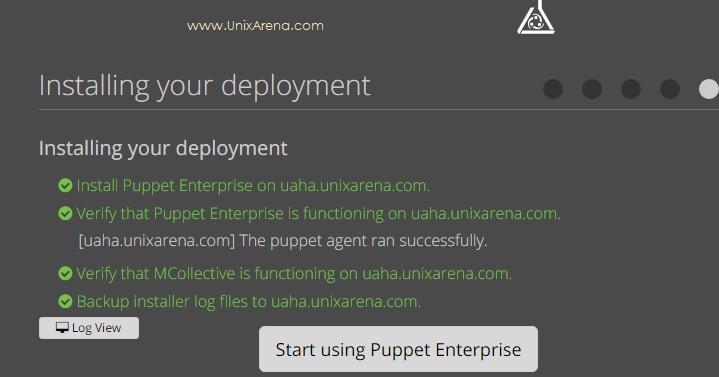

14. Once the installation is complete, you should see the screen below. Click “Start using Enterprise”.

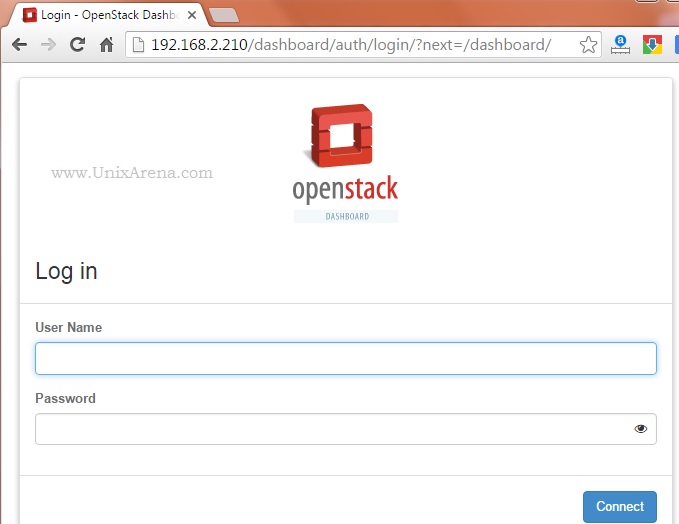

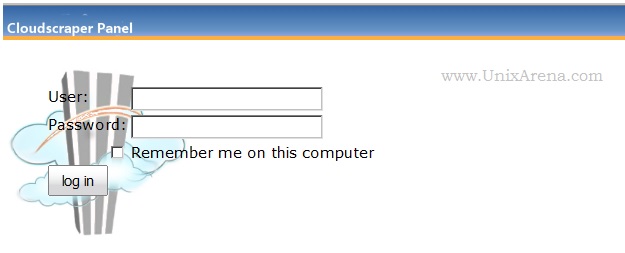

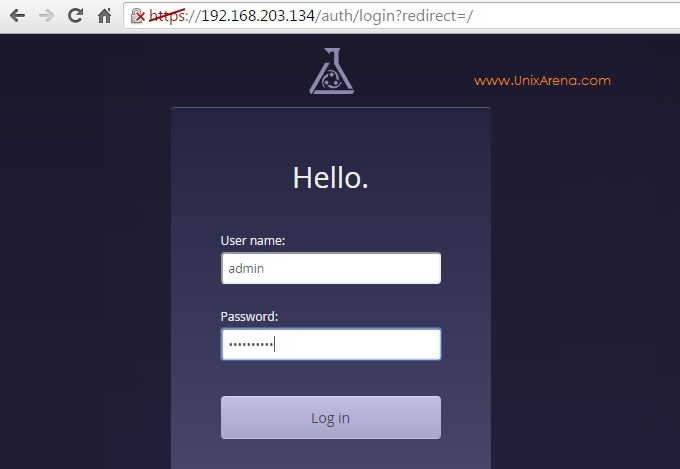

15. You will be automatically redirected to the Puppet login page.

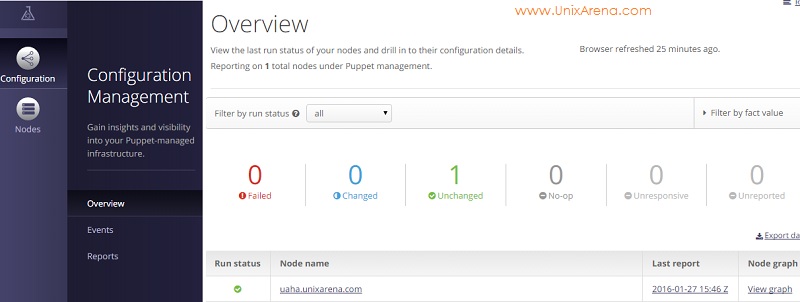

16. Once you have logged in as admin, you will see the console overview page.

Puppet Version:

    [root@UA-HA ~]# puppet --version
    4.3.1
    [root@UA-HA ~]#
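If you want to script this check, for example as part of a post-install sanity test, the version can be compared against an expected minimum. This is only a sketch: `version_at_least` is a hypothetical helper, and it relies on `sort -V` from GNU coreutils, which is available on RHEL 7.

```shell
#!/bin/sh
# Hypothetical helper: succeed when version $1 is greater than or equal to
# version $2, using the version ordering of GNU sort -V.
version_at_least() {
    lowest=$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}
```

On the master, `version_at_least "$(puppet --version)" 4.3.1 && echo "Puppet version OK"` would confirm that the installed Puppet is at least the version shown above.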

We have successfully deployed Puppet Enterprise (Puppet Master) on RHEL 7. Hope this article was informative.

The post Puppet – How to install and configure Puppet Enterprise (Master) ? appeared first on UnixArena.