Millions of computers use the bash shell (command interpreter). A new security flaw has been found in bash, the Bash Code Injection Vulnerability (CVE-2014-6271), and it allows attackers to take control of a system remotely. The Heartbleed wave (an OpenSSL vulnerability) was only just over last April. Does Shellshock hurt more than Heartbleed? Of course, yes. Heartbleed was all about sniffing system memory, but Shellshock opens the door much wider: it gives direct access to the system. Bash (Bourne-Again SHell) is the default shell in most Linux flavors (Red Hat Linux, openSUSE, Ubuntu, etc.) and in Oracle Solaris 11. Some other operating systems also ship bash, but not as the default shell. Red Hat has become aware that the patch for CVE-2014-6271 is incomplete, and Oracle is working on this issue. We can expect bash patches from operating system vendors very soon. Stay tuned.

How can I find out whether my bash version is vulnerable? (Bash Shell Remote Code Execution Vulnerability: CVE-2014-6271, CVE-2014-7169)

Red Hat Linux:

1. Make sure the bash shell is in the command search path.

[root@Global-RH ~]# which bash

/bin/bash

[root@Global-RH ~]#

2. Run the command below and check the result.

[root@Global-RH ~]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

vulnerable

this is a test

[root@Global-RH ~]#

Here the command output shows “vulnerable”. It means you are using a vulnerable version of bash (the Shellshock vulnerability).
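The same probe can be wrapped in a small function so that it is easy to run against a specific bash binary or from a script. This is only a sketch: `check_cve_2014_6271` is a hypothetical helper name, and it looks only for the "vulnerable" line produced by the test string above.

```shell
#!/usr/bin/env bash
# Sketch: report whether a given bash binary is affected by CVE-2014-6271.
# check_cve_2014_6271 is a hypothetical helper name, not part of any tool.
check_cve_2014_6271() {
    local bash_bin="${1:-bash}"
    local output
    # Same probe as above: a function-like variable with a trailing command.
    output="$(env x='() { :;}; echo vulnerable' "$bash_bin" -c 'echo this is a test' 2>/dev/null)"
    # A vulnerable bash runs the trailing command and prints "vulnerable"
    # on a line of its own before the test message.
    if printf '%s\n' "$output" | grep -qx 'vulnerable'; then
        echo "vulnerable"
        return 1
    fi
    echo "not vulnerable"
    return 0
}

# Check the bash that is first in the search path.
check_cve_2014_6271 "$(command -v bash)"
```

The function returns a non-zero status for a vulnerable binary, so it can be used directly in an `if` statement or a monitoring check.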

Workaround (not working):

1. Copy the contents below to /var/tmp/ as bash_ld_preload.c.

    #include <sys/types.h>
    #include <stdlib.h>
    #include <string.h>

    static void __attribute__ ((constructor)) strip_env(void);

    extern char **environ;

    static void strip_env()
    {
        char *p,*c;
        int i = 0;

        for (p = environ[i]; p!=NULL;i++ ) {
            c = strstr(p,"=() {");
            if (c != NULL) {
                *(c+2) = '\0';
            }
            p = environ[i];
        }
    }

2. Verify the checksum.

[root@Global-RH ~]# sha256sum bash_ld_preload.c

28cb0ab767a95dc2f50a515106f6a9be0f4167f9e5dbc47db9b7788798eef153 bash_ld_preload.c

[root@Global-RH ~]#

3. Make sure the gcc compiler is in the path.

[root@Global-RH ~]# which gcc

/usr/bin/gcc

[root@Global-RH ~]#

4. Compile bash_ld_preload.c as shown below. This creates a file called “bash_ld_preload.so”.

[root@Global-RH ~]# gcc bash_ld_preload.c -fPIC -shared -Wl,-soname,bash_ld_preload.so.1 -o bash_ld_preload.so

[root@Global-RH ~]# ls -lrt

total 112

-rw-r--r--. 1 root root 325 Sep 25 23:16 bash_ld_preload.c

-rwxr-xr-x. 1 root root 6201 Sep 25 23:22 bash_ld_preload.so

[root@Global-RH ~]#

5. Copy bash_ld_preload.so to /lib.

[root@Global-RH ~]# cp bash_ld_preload.so /lib/

6. Create a new file called "/etc/ld.so.preload" and add "/lib/bash_ld_preload.so" to it.

[root@Global-RH ~]# vi /etc/ld.so.preload

[root@Global-RH ~]# cat /etc/ld.so.preload

/lib/bash_ld_preload.so

[root@Global-RH ~]# file /lib/bash_ld_preload.so

/lib/bash_ld_preload.so: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, not stripped

7. Export the library in the startup file of each service that needs it. My system is a web server, so I added it to /etc/init.d/httpd.

[root@Global-RH ~]# grep LD /etc/init.d/httpd

LD_PRELOAD=/lib/bash_ld_preload.so

export LD_PRELOAD

[root@Global-RH ~]#

8. Restart the necessary services.

[root@Global-RH ~]# service httpd restart

Stopping httpd: [ OK ]

ServerName [ OK ]

[root@Global-RH ~]#

9. Check bash for the vulnerability again using the same test string.

[root@Global-RH ~]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

this is a test

[root@Global-RH ~]#

The “vulnerable” message has disappeared, but the expected output from a patched bash is something like the following.

[root@Global-RH Desktop]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

bash: warning: x: ignoring function definition attempt

bash: error importing function definition for `x'

this is a test

[root@Global-RH Desktop]#

How to fix the Shellshock bug on Red Hat Linux?

1. Download the rpm package below that matches your OS version and architecture, and update the bash rpm. You can download these rpm packages from Red Hat (see Sources).

| OS | RPM | Architecture |
|---|---|---|
| Red Hat Enterprise Linux 6 | bash-4.1.2-15.el6_5.2.x86_64.rpm | 64-bit |
| Red Hat Enterprise Linux 6 | bash-4.1.2-15.el6_5.2.i686.rpm | 32-bit |
| Red Hat Enterprise Linux 5 | bash-3.2-33.el5_11.4.x86_64.rpm | 64-bit |
| Red Hat Enterprise Linux 5 | bash-3.2-33.el5_11.4.i386.rpm | 32-bit |

[root@Global-RH Desktop]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

vulnerable

this is a test

[root@Global-RH Desktop]# bash -version

GNU bash, version 4.1.2(1)-release (x86_64-redhat-linux-gnu)

Copyright (C) 2009 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software; you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

[root@Global-RH Desktop]# rpm -qa bash

bash-4.1.2-9.el6_2.x86_64

[root@Global-RH Desktop]#

[root@Global-RH Desktop]# rpm -Uvh bash-4.1.2-15.el6_5.1.x86_64.rpm

warning: bash-4.1.2-15.el6_5.1.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID fd431d51: NOKEY

Preparing... ########################################### [100%]

1:bash ########################################### [100%]

[root@Global-RH Desktop]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

bash: warning: x: ignoring function definition attempt

bash: error importing function definition for `x'

this is a test

[root@Global-RH Desktop]#

2. Reload the libraries:

[root@Global-RH Desktop]# /sbin/ldconfig

[root@Global-RH Desktop]# echo $?

0

[root@Global-RH Desktop]#
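Because the first patch for CVE-2014-6271 was reported as incomplete, it is worth running a second probe for CVE-2014-7169 after the update. The sketch below uses the widely circulated test string for that CVE, which makes an affected bash create a file named `echo` in the current directory. `check_cve_2014_7169` is a hypothetical helper name, and the probe runs in a temporary directory so that nothing is left behind.

```shell
#!/usr/bin/env bash
# Sketch: probe a bash binary for CVE-2014-7169 (the incomplete-fix issue).
# check_cve_2014_7169 is a hypothetical helper name.
check_cve_2014_7169() {
    local bash_bin="${1:-bash}"
    local workdir result
    workdir="$(mktemp -d)" || return 2
    # On an affected bash, the leftover ">\" from the broken function
    # definition turns "echo date" into "date > echo", creating a file
    # called "echo" in the current directory.
    # Pass an absolute path or a command name, since we change directory.
    ( cd "$workdir" && env 'x=() { (a)=>\' "$bash_bin" -c "echo date" ) >/dev/null 2>&1
    if [ -e "$workdir/echo" ]; then
        result="vulnerable"
    else
        result="not vulnerable"
    fi
    rm -rf "$workdir"
    echo "$result"
    [ "$result" = "not vulnerable" ]
}

check_cve_2014_7169 "$(command -v bash)"
```

If this still reports "vulnerable" after the first update, a later bash package from the vendor is needed.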

Do I need to reboot or restart services after installing this update?

No, a reboot of your system or any of your services is not required. This vulnerability is in the initial import of the process environment from the kernel. This only happens when Bash is started. After the update that fixes this issue is installed, such new processes will use the new code, and will not be vulnerable. Conversely, old processes will not be started again, so the vulnerability does not materialize. If you have a strong reason to suspect that a system was compromised by this vulnerability then a system reboot should be performed as a best security practice and security checks should be analyzed for suspicious activity.
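If you still want to see which bash processes were started before the update (for example, while reviewing a system for suspicious activity), the following sketch lists them. It relies on a Linux-specific assumption: when a package update replaces the bash binary, `/proc/<pid>/exe` of older processes points to a path ending in "(deleted)". `list_stale_bash_pids` is a hypothetical helper name, and it only sees processes that the current user is allowed to inspect.

```shell
#!/usr/bin/env bash
# Sketch (Linux only): print the PIDs of bash processes that are still
# running from a binary that has since been replaced on disk.
# list_stale_bash_pids is a hypothetical helper name.
list_stale_bash_pids() {
    local exe_link target pid
    for exe_link in /proc/[0-9]*/exe; do
        # readlink fails for processes we may not inspect; skip those.
        target="$(readlink "$exe_link" 2>/dev/null)" || continue
        case "$target" in
            *"/bash (deleted)")
                pid="${exe_link#/proc/}"
                echo "${pid%/exe}"
                ;;
        esac
    done
    return 0
}

list_stale_bash_pids
```

An empty result means no running bash process is using a replaced binary.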

Oracle Solaris 10:

You can use the same test string to find out whether Shellshock is saying “Hello” to Solaris. In general, we can assume that every system with an unpatched bash shell installed is hit by this bug.

[root@UA_SOL10 ~]# env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

vulnerable

this is a test

[root@UA_SOL10 ~]#

[root@UA_SOL10 ~]# bash -version

GNU bash, version 3.2.51(1)-release (sparc-sun-solaris2.10)

Copyright (C) 2007 Free Software Foundation, Inc.

[root@UA_SOL10 ~]# pkginfo -l SUNWbash

PKGINST: SUNWbash

NAME: GNU Bourne-Again shell (bash)

CATEGORY: system

ARCH: sparc

VERSION: 11.10.0,REV=2005.01.08.05.16

BASEDIR: /

VENDOR: Oracle Corporation

DESC: GNU Bourne-Again shell (bash) version 3.2

PSTAMP: sfw10-patch20120813130538

INSTDATE: Feb 13 2014 16:13

HOTLINE: Please contact your local service provider

STATUS: completely installed

FILES: 4 installed pathnames

2 shared pathnames

2 directories

1 executables

1552 blocks used (approx)

[root@UA_SOL10 ~]# exit

How to fix the Shellshock bug on Oracle Solaris 10?

1. Download the patches from https://support.oracle.com. The following table lists the IDRs or patches required to resolve the vulnerabilities described in CVE-2014-6271 and CVE-2014-7169.

| CVE | Solaris 11.2 | Solaris 11.1 | Solaris 10 SPARC | Solaris 10 x86 | Solaris 9 SPARC | Solaris 9 x86 |
|---|---|---|---|---|---|---|
| CVE-2014-6271, CVE-2014-7169 | 11.2.2.7.0 | IDR1400.2 (applies to Solaris 11.1 to Solaris 11.1 SRU12.5); IDR1401.2 (applies to Solaris 11.1 SRU13.6 to Solaris 11.1 SRU21.4.1) | 126546-06 | 126547-06 | 149079-01 | 149080-01 |
| CVE-2014-7186, CVE-2014-7187 | IDR1404.1 | IDR1400.2 (applies to Solaris 11.1 to Solaris 11.1 SRU12.5); IDR1401.2 (applies to Solaris 11.1 SRU13.6 to Solaris 11.1 SRU21.4.1) | IDR151577-02 | IDR151578-02 | IDR151573-02 | IDR151574-02 |

2. This Solaris 10 patch has dependencies, so you also need to download the prerequisite patch if your system does not already have it (126547-05 in my case).

3. Copy the patches to the system and install them. My system did not have patch 126547-05, so let me install it first.

UASOL1:#showrev -p |grep 126547

Patch: 126547-04 Obsoletes: Requires: Incompatibles: Packages: SUNWbash, SUNWsfman

UASOL1:#

UASOL1:#unzip 126547-05.zip > /dev/null

UASOL1:#ls -lrt

total 12057

drwxr-xr-x 5 root root 14 Nov 19 2013 126547-05

-rwx------ 1 root root 328477 Sep 26 13:36 p19689293_1000_Solaris86-64.zip

-rwx------ 1 root root 434305 Sep 26 13:38 p19689287_1000_SOLARIS64.zip

-rwx------ 1 root root 2615963 Sep 26 13:39 126546-05.zip

-rwx------ 1 root root 2510125 Sep 26 13:40 126547-05.zip

UASOL1:#

UASOL1:#patchadd /var/tmp/shellshock/126547-05

Validating patches...

Loading patches installed on the system...

Done!

Loading patches requested to install.

Done!

The following requested patches have packages not installed on the system

Package SUNWbashS from directory SUNWbashS in patch 126547-05 is not installed on the system. Changes for package SUNWbashS will not be applied to the system.

Checking patches that you specified for installation.

Done!

Approved patches will be installed in this order:

126547-05

Checking installed patches...

Executing prepatch script...

Installing patch packages...

Patch 126547-05 has been successfully installed.

See /var/sadm/patch/126547-05/log for details

Executing postpatch script...

Patch packages installed:

SUNWbash

SUNWsfman

UASOL1:#

4. Let me install the shellshock patch.

UASOL1:#unzip p19689293_1000_Solaris86-64.zip > /dev/null

UASOL1:#unzip IDR151578-01-735861361.zip > /dev/null

UASOL1:#patchadd /var/tmp/shellshock/IDR151578-01

Validating patches...

Loading patches installed on the system...

Done!

Loading patches requested to install.

Done!

Checking patches that you specified for installation.

Done!

Approved patches will be installed in this order:

IDR151578-01

Checking installed patches...

Executing prepatch script...

#############################################################

INTERIM DIAGNOSTICS/RELIEF (IDR) IS PROVIDED HEREBY "AS IS",

TO AUTHORIZED CUSTOMERS ONLY. IT IS LICENSED FOR USE ON

SPECIFICALLY IDENTIFIED EQUIPMENT, AND FOR A LIMITED PERIOD OF

TIME AS DEFINED BY YOUR SERVICE PROVIDER. ANY PROGRAM

MODIFIED THROUGH ITS USE REMAINS GOVERNED BY THE TERMS AND

CONDITONS OF THE ORIGINAL LICENSE APPLICABLE TO THAT

PROGRAM. INSTALLATION OF THIS IDR NOT MEETING THESE CONDITIONS

SHALL WAIVE ANY WARRANTY PROVIDED UNDER THE ORIGINAL LICENSE.

FOR MORE DETAILS, SEE THE README.

#############################################################

Do you wish to continue this installation {yes or no} [yes]?

(by default, installation will continue in 60 seconds)

yes

Installing patch packages...

Patch IDR151578-01 has been successfully installed.

See /var/sadm/patch/IDR151578-01/log for details

Executing postpatch script...

Patch packages installed:

SUNWbash

UASOL1:#

Let me test for the vulnerability using the echo command.

UASOL1:#env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

bash: warning: x: ignoring function definition attempt

bash: error importing function definition for `x'

this is a test

UASOL1:#

Looks good. The patch works perfectly!
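The prerequisite check from step 3 can also be scripted when many Solaris 10 hosts have to be patched. The sketch below reads `showrev -p` output on standard input and succeeds only if the given patch is installed at the required revision or later. `has_patch_rev` is a hypothetical helper name, and the sample line is the one from the transcript above.

```shell
#!/usr/bin/env bash
# Sketch: check `showrev -p` output for a minimum patch revision.
# has_patch_rev is a hypothetical helper name.
# Usage: showrev -p | has_patch_rev <patch-id> <minimum-revision>
# Assumption: an awk that supports -v; on Solaris 10 that may mean nawk
# or /usr/xpg4/bin/awk rather than the legacy /usr/bin/awk.
has_patch_rev() {
    awk -v id="$1" -v need="$2" '
        $1 == "Patch:" {
            # The second field looks like 126547-04: patch ID, dash, revision.
            split($2, parts, "-")
            if (parts[1] == id && parts[2] + 0 >= need + 0) ok = 1
        }
        END { exit ok ? 0 : 1 }
    '
}

# Sample line taken from the showrev output shown in step 3.
sample='Patch: 126547-04 Obsoletes: Requires: Incompatibles: Packages: SUNWbash, SUNWsfman'
if printf '%s\n' "$sample" | has_patch_rev 126547 05; then
    echo "prerequisite present"
else
    echo "prerequisite missing"   # printed here: revision 04 is older than 05
fi
```

On a live system the sample line would be replaced by the real `showrev -p` output piped into the function.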

How to fix the Shellshock bug on Oracle Solaris 11.2:

(Refer to the table above for patch information.)

1. Check whether bash is vulnerable, and check the bash and OS release versions.

UA_SOL11:~#env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

vulnerable

this is a test

UA_SOL11:~#bash -version

GNU bash, version 4.1.11(2)-release (i386-pc-solaris2.11)

Copyright (C) 2009 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software; you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

UA_SOL11:~#pkg info bash

Name: shell/bash

Summary: GNU Bourne-Again shell (bash)

Category: System/Shells

State: Installed

Publisher: solaris

Version: 4.1.11

Build Release: 5.11

Branch: 0.175.2.0.0.42.1

Packaging Date: June 23, 2014 02:15:58 AM

Size: 2.98 MB

FMRI: pkg://solaris/shell/bash@4.1.11,5.11-0.175.2.0.0.42.1:20140623T021558Z

UA_SOL11:~#uname -a

SunOS SAN 5.11 11.2 i86pc i386 i86pc

UA_SOL11:~#

2. Copy the downloaded p5p package to the system and unzip it.

UA_SOL11:~#ls -lrt

total 4103

-rwx------ 1 root root 2033944 Sep 26 14:35 p19687137_112000_Solaris86-64.zip

UA_SOL11:~#unzip p19687137_112000_Solaris86-64.zip > /dev/null

UA_SOL11:~#ls -lrt

total 8976

-rw-r--r-- 1 root root 2416640 Sep 25 18:32 idr1399.1.p5p

-rw-r--r-- 1 root root 433 Sep 25 18:48 README.idr1399.1.txt

-rwx------ 1 root root 2033944 Sep 26 14:35 p19687137_112000_Solaris86-64.zip

UA_SOL11:~#

3. I tried to install it directly without setting a publisher, but that failed: the image has children, so a temporary origin given with -g is not accepted.

UA_SOL11:~# pkg install -g ./idr1399.1.p5p idr1399.1

pkg install: The proposed operation on this parent image can not be performed because temporary origins were specified and this image has children. Please either retry the operation again without specifying any temporary origins, or if packages from additional origins are required, please configure those origins persistently.

UA_SOL11:~#

4. Let me set the publisher for this patch.

UA_SOL11:~#pkg set-publisher -g file:///var/tmp/shellshock/idr1399.1.p5p solaris

UA_SOL11:~#

UA_SOL11:~#pkg publisher

PUBLISHER TYPE STATUS P LOCATION

solaris origin online F file:///var/tmp/shellshock/idr1399.1.p5p/

solaris origin online F http://192.168.2.49:909/

UA_SOL11:~#

5. Install the patch using the pkg command.

UA_SOL11:~#pkg install idr1399

Packages to install: 1

Packages to update: 1

Create boot environment: No

Create backup boot environment: Yes

Release Notes:

# Release Notes for IDR : idr1399

# -------------------------------

Release : Solaris 11.2 SRU # 2.5.0

Platform : COMMON

Bug(s) addressed :

19682871 : Problem with utility/bash

Package(s) included :

pkg:/shell/bash

Removal instruction :

# /usr/bin/pkg update --reject pkg://solaris/idr1399@1,5.11 pkg:/shell/bash@4.1.11,5.11-0.175.2.0.0.42.1:20140623T021558Z

Generic Instructions :

1) If system is configured with 'Zones', ensure that IDR is available in a configured repository.

2) When removing IDR, you may NOT have all packages

specified in "Removal instruction" installed on the system.

Thus put only those packages in removal which are installed on the system

Special Instructions for : idr1399

None.

DOWNLOAD PKGS FILES XFER (MB) SPEED

Completed 2/2 10/10 0.5/0.5 35.5M/s

PHASE ITEMS

Removing old actions 3/3

Installing new actions 14/14

Updating modified actions 3/3

Updating package state database Done

Updating package cache 1/1

Updating image state Done

Creating fast lookup database Done

Updating package cache 1/1

UA_SOL11:~#

6. Let me test for the vulnerability again.

UA_SOL11:~#env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

bash: warning: x: ignoring function definition attempt

bash: error importing function definition for `x'

this is a test

UA_SOL11:~#

Cool. It works perfectly for Solaris 11 too.
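The removal instruction in the IDR release notes refers to the FMRI of the original bash package, so it can help to record that FMRI before installing the IDR. The sketch below pulls the FMRI field out of `pkg info` output read from standard input. `bash_fmri` is a hypothetical helper name, and the sample text is taken from the `pkg info bash` output shown in step 1.

```shell
#!/usr/bin/env bash
# Sketch: extract the FMRI field from `pkg info` output on stdin.
# bash_fmri is a hypothetical helper name.
# Usage on Solaris 11: pkg info bash | bash_fmri
bash_fmri() {
    # Print the value of the first "FMRI:" line and stop.
    awk '$1 == "FMRI:" { print $2; exit }'
}

# Sample input copied from the pkg info output in step 1.
bash_fmri <<'EOF'
Name: shell/bash
State: Installed
Version: 4.1.11
Branch: 0.175.2.0.0.42.1
FMRI: pkg://solaris/shell/bash@4.1.11,5.11-0.175.2.0.0.42.1:20140623T021558Z
EOF
# prints pkg://solaris/shell/bash@4.1.11,5.11-0.175.2.0.0.42.1:20140623T021558Z
```

Saving this value to a file before step 5 keeps the information needed for a later rollback at hand.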

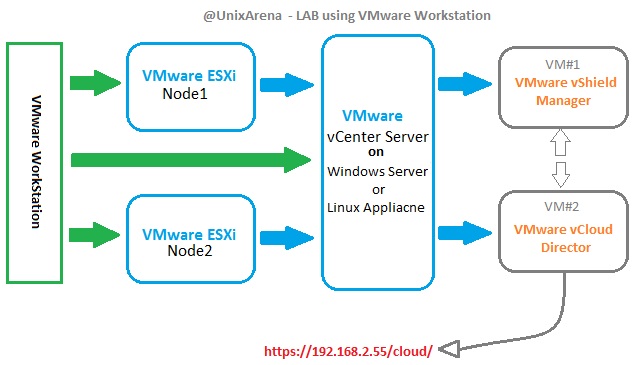

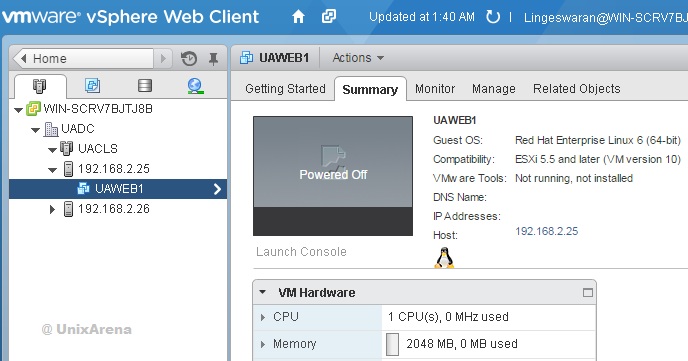

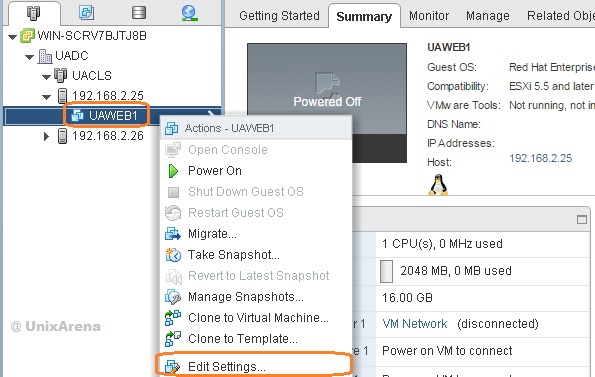

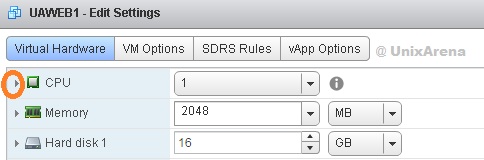

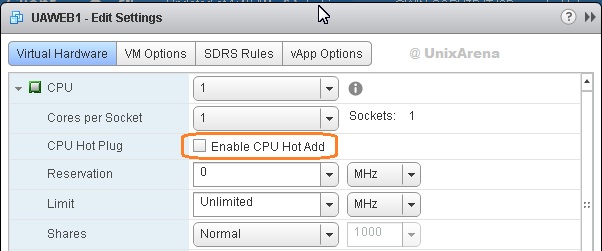

VMware ESXi:

VMware ESXi has its own shell and does not use bash; you can’t even install bash on ESXi. According to their security blog, VMware is investigating the bash command injection vulnerability, aka Shell Shock (CVE-2014-6271, CVE-2014-7169). It may apply to ESX 3.5 and ESX 4.

ESXi 4.0, 4.1, 5.0, 5.1, and 5.5 are not affected because these versions use the Ash shell (through busybox), which is not affected by the vulnerability reported for the Bash shell.

ESX 4.0 and 4.1 have a vulnerable version of the Bash shell.

Some Linux-based appliances from VMware ship with this vulnerable bash shell. You can download the patches to fix them here.

- VMware vCenter Server Appliance 5.0 U3b – download

- VMware vCenter Server Appliance 5.1 U2b – download

- VMware vCenter Server Appliance 5.5 U2a – download

To know more about the VMware appliance vulnerability, please check the VMware KB.

Sources:

- https://community.oracle.com/thread/3612189

- https://access.redhat.com/articles/1200223

- https://securityblog.redhat.com/2014/09/24/bash-specially-crafted-environment-variables-code-injection-attack/

- https://rhn.redhat.com/rhn/errata/details/Packages.do?eid=27888

- http://blogs.vmware.com/security/2014/09/vmware-investigating-bash-command-injection-vulnerability-aka-shell-shock-cve-2014-6271-cve-2014-7169.html

- https://community.oracle.com/thread/3612825

- http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2090740

The post Shellshock bug – vulnerability on Bash shell appeared first on UnixArena.